I'm going to isolate a few things and talk about what focus can tell us:

make - Apple

model - iPhone 13 Pro

lens_model - iPhone 13 Pro back triple camera 5.7mm f/1.5

Cell phone camera confirmed

The iPhone 13 Pro and 13 Pro Max each have three rear cameras: a main wide-angle lens, an ultrawide lens, and a telephoto camera with 3x optical zoom.

The f/1.5 lens is the wide-angle lens.

So... if this were a distant object, why not choose the telephoto lens?

Because it happened too fast? But if it happened that fast, did the photographer see it with the naked eye at the time? That seems to be the claim. How else would the photographer have been able to estimate its size and distance? He would have had to see it with the naked eye. But if he had that much time, why didn't a pro switch to the telephoto lens?

exposure_time - 1/6579

Motion blur will be minimal
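To put "minimal" into numbers: during an exposure of 1/6579 s (about 0.15 ms), anything moving at a constant speed covers only speed × time. Here's a small Python sketch; the speeds in it are arbitrary examples I picked for illustration, not estimates of the object's actual speed.

```python
# Exposure time recorded in the EXIF: 1/6579 s.
EXPOSURE_S = 1 / 6579


def travel_during_exposure(speed_m_per_s: float, exposure_s: float = EXPOSURE_S) -> float:
    """Distance in metres covered at a constant speed while the shutter is open."""
    return speed_m_per_s * exposure_s


if __name__ == "__main__":
    print(f"exposure: {EXPOSURE_S * 1000:.3f} ms")
    # Hypothetical speeds, for illustration only; not estimates of the object's speed.
    for speed in (1.0, 5.0, 50.0):
        print(f"{speed:5.1f} m/s -> {travel_during_exposure(speed) * 1000:.2f} mm of travel")
```

At a hypothetical 5 m/s, for example, the object moves well under a millimetre (about 0.76 mm) while the shutter is open.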

focal_length35efl - 5.7 mm (35 mm equivalent: 26.0 mm)

The 35 mm equivalent is handy to know, but later I'll be using the real focal length and the sensor size of the wide-angle camera, which is 1/1.9".
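The two focal lengths in the EXIF also pin down the crop factor, and from it the sensor diagonal the phone is effectively reporting. A small sketch, assuming the usual convention that the "35 mm equivalent" scales the real focal length by the ratio of a 36 x 24 mm frame's diagonal to the sensor's diagonal; the implied diagonal can then be compared with whatever nominal sensor size gets typed into a calculator.

```python
import math

FOCAL_LENGTH_MM = 5.7                          # real focal length from EXIF
EQUIV_35MM_MM = 26.0                           # 35 mm equivalent from EXIF
FULL_FRAME_DIAG_MM = math.hypot(36.0, 24.0)    # diagonal of a 36 x 24 mm frame


def crop_factor(real_f_mm: float, equiv_f_mm: float) -> float:
    """Ratio of the 35 mm equivalent focal length to the real one."""
    return equiv_f_mm / real_f_mm


def implied_sensor_diagonal(real_f_mm: float, equiv_f_mm: float) -> float:
    """Sensor diagonal in mm implied by the two EXIF focal lengths."""
    return FULL_FRAME_DIAG_MM / crop_factor(real_f_mm, equiv_f_mm)


if __name__ == "__main__":
    cf = crop_factor(FOCAL_LENGTH_MM, EQUIV_35MM_MM)
    diag = implied_sensor_diagonal(FOCAL_LENGTH_MM, EQUIV_35MM_MM)
    print(f"crop factor ~ {cf:.2f}, implied sensor diagonal ~ {diag:.2f} mm")
```

With the EXIF values this gives a crop factor of about 4.56 and an implied sensor diagonal of about 9.49 mm.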

lens_info - 1.57-9mm f/1.5-2.8

f_number - 1.5

Wide open (f/1.5 is this lens's maximum aperture)

focus_distance_range - 0.28 – 0.72 m

The old-school definition of focusing distance:

Focusing distance is the distance from the focal plane (the film or sensor) to the subject.

Focus distance range is a new one on me.

From what I understand after some research just today, this indicates the depth of field for the lens at the moment the photo was taken, given the focal length, the f-stop, and the focus setting. In other words, the point of best focus was at approximately(!) 44 cm. The question is: is this accurate? There's abundant discussion on the Internet about whether this value can be trusted. I'm afraid the only way to tell for sure is to test an iPhone 13 Pro back triple camera with the 5.7mm f/1.5 lens selected.

I haven't seen a discussion yet about cell phone cameras or this model in particular.

circle_of_confusion - 0.007 mm

hyperfocal_distance - 3.29 m

I'm taking this to mean the hyperfocal distance for this lens while set to f/1.5.
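These two numbers can be reproduced from the rest of the EXIF. The field names (focal_length35efl, circle_of_confusion, hyperfocal_distance) look like ExifTool's composite tags, so this sketch assumes ExifTool-style conventions: circle of confusion = sensor diagonal / 1440, and hyperfocal distance H = f² / (N·c). Those conventions are my assumption about how the values were generated, not something stated in the metadata.

```python
import math


def circle_of_confusion_mm(real_f_mm: float, equiv_f_mm: float) -> float:
    """CoC taken as sensor diagonal / 1440 (assumed convention, see above)."""
    sensor_diag_mm = math.hypot(36.0, 24.0) * real_f_mm / equiv_f_mm
    return sensor_diag_mm / 1440.0


def hyperfocal_m(f_mm: float, n: float, coc_mm: float) -> float:
    """Hyperfocal distance H = f^2 / (N * c), converted from mm to metres."""
    return f_mm ** 2 / (n * coc_mm) / 1000.0


if __name__ == "__main__":
    c = circle_of_confusion_mm(5.7, 26.0)
    h = hyperfocal_m(5.7, 1.5, c)
    print(f"CoC ~ {c:.4f} mm, hyperfocal ~ {h:.2f} m")
```

The unrounded circle of confusion comes out at about 0.0066 mm (shown as 0.007 mm in the metadata), and with it the hyperfocal distance is about 3.29 m, matching the EXIF. Plugging in the rounded 0.007 mm instead gives about 3.09 m. The textbook variant H = f²/(N·c) + f only adds 5.7 mm, which is negligible here.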

What would all this tell us?

First, see a discussion here about hyperfocal distance:

https://www.metabunk.org/threads/go...-academy-bird-balloon.9569/page-4#post-220366

If we accept this as accurate:

focus_distance_range - 0.28 – 0.72 m

The lens was focused pretty close: about(!) 44 cm (17 inches). The depth of field (the zone that appears to us humans to be in acceptable focus) would run from about 11 to 28 inches.
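If the 0.28 – 0.72 m range really is the depth of field, we can also work backwards from it. Under the usual approximations near ≈ H·s/(H+s) and far ≈ H·s/(H−s) (fine when the subject is much farther away than the focal length), 1/near + 1/far = 2/s and 1/near − 1/far = 2/H. So the focus distance s is the harmonic mean of the two limits, and a hyperfocal distance H falls out as well. This is a sketch under those approximations; comparing the H it returns with the 3.29 m hyperfocal_distance in the EXIF is one more way to ask whether the range can be trusted as a depth-of-field figure.

```python
def implied_focus_and_hyperfocal(near_m: float, far_m: float) -> tuple[float, float]:
    """Back out focus distance s and hyperfocal distance H from DOF limits.

    Uses the approximations near = H*s/(H+s) and far = H*s/(H-s),
    which give 1/near + 1/far = 2/s and 1/near - 1/far = 2/H.
    """
    inv_near, inv_far = 1.0 / near_m, 1.0 / far_m
    focus = 2.0 / (inv_near + inv_far)       # harmonic mean of the two limits
    hyperfocal = 2.0 / (inv_near - inv_far)
    return focus, hyperfocal


if __name__ == "__main__":
    s, h = implied_focus_and_hyperfocal(0.28, 0.72)  # focus_distance_range from EXIF
    print(f"implied focus ~ {s:.2f} m, implied hyperfocal ~ {h:.2f} m")
```

With the EXIF range this gives a focus distance of about 0.40 m (a little under the 44 cm estimate) and a hyperfocal distance of about 0.92 m, noticeably different from the 3.29 m reported. That's worth keeping in mind when deciding how far to trust the range.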

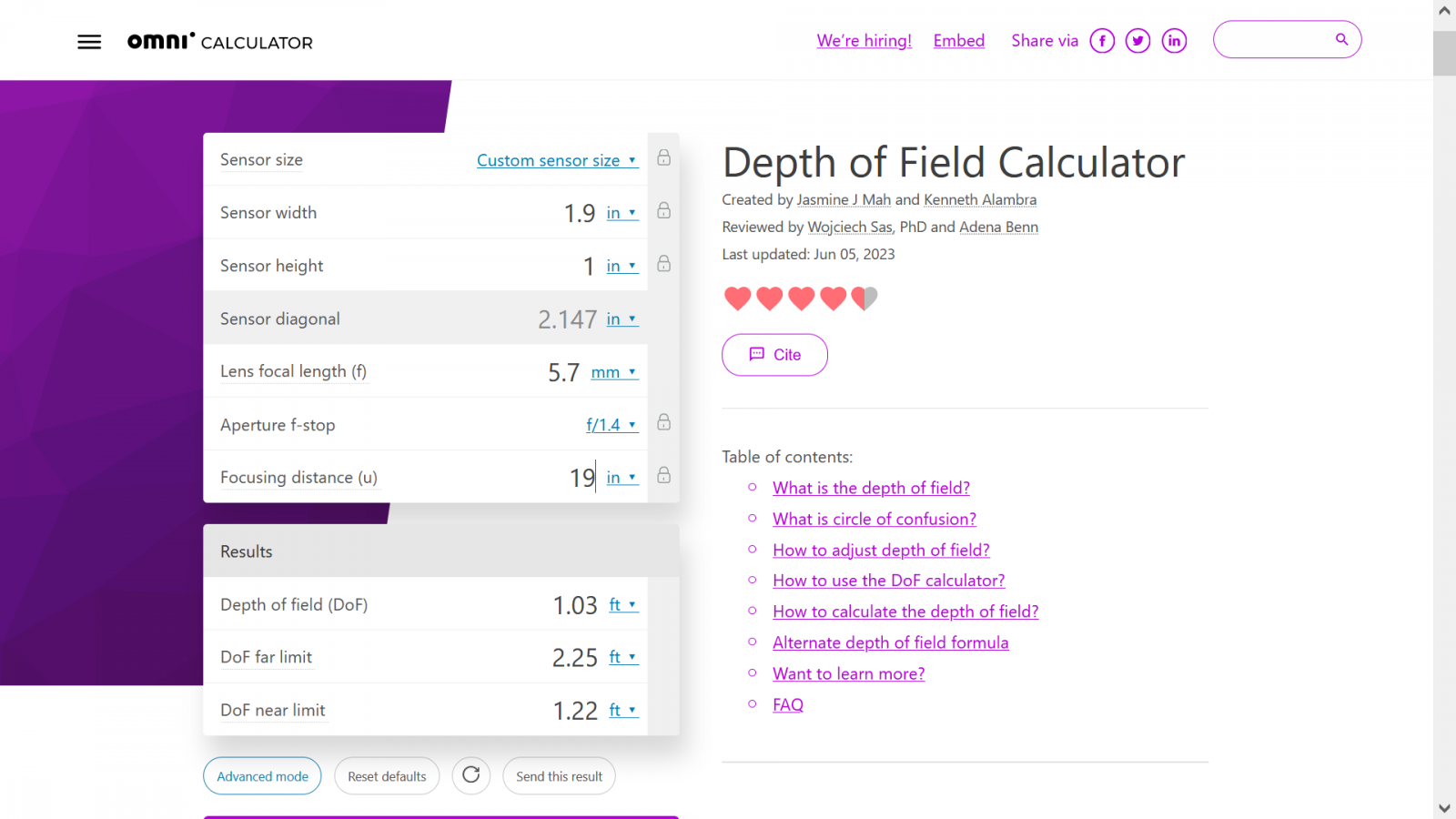

I'm going to test whether this makes sense by using a depth of field calculator. I've entered the sensor size and the true focal length. The closest f-stop I can select is f/1.4:

https://www.omnicalculator.com/other/depth-of-field

https://www.omnicalculator.com/othe...1.4142,aperture:1!!l,focus_distance:19!inch!l


Yeah, that seems to make sense. But of course it doesn't prove the value is accurate.
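For anyone who wants to check the calculator's arithmetic offline, here's a sketch using the standard thin-lens depth-of-field formulas. It's my assumption that the online calculator uses something equivalent; its circle of confusion may also differ from the 0.007 mm in the EXIF, so the results won't necessarily match it exactly.

```python
import math


def depth_of_field_m(f_mm: float, n: float, coc_mm: float, focus_m: float) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness, in metres.

    Standard thin-lens formulas:
        H    = f^2 / (N c) + f
        near = s (H - f) / (H + s - 2f)
        far  = s (H - f) / (H - s)    (infinite when s >= H)
    """
    s = focus_m * 1000.0                       # work in millimetres
    h = f_mm ** 2 / (n * coc_mm) + f_mm
    near = s * (h - f_mm) / (h + s - 2 * f_mm)
    far = math.inf if s >= h else s * (h - f_mm) / (h - s)
    return near / 1000.0, far / 1000.0


if __name__ == "__main__":
    focus = 19 * 0.0254                        # 19 inches, as entered in the calculator
    near, far = depth_of_field_m(5.7, 1.5, 0.007, focus)
    print(f"in acceptable focus from ~{near:.2f} m to ~{far:.2f} m")
```

With f = 5.7 mm, f/1.5, c = 0.007 mm and a focus distance of 19 inches, this returns a zone of roughly 0.42 to 0.57 m. Focusing at or beyond the hyperfocal distance makes the far limit infinite.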

If we can trust that the point of best focus was somewhere under half a meter, this puts it well short of the hyperfocal distance of this lens at f/1.5, which is 3.29 meters.

So what does that mean? It means a distant object would be in poor focus.
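"Poor focus" can be put into rough numbers. With the lens focused at a distance s, the sensor sits at v = f·s/(s−f) behind the lens, but a point at infinity comes to focus at f. By the time its light cone (aperture diameter f/N) reaches the sensor, it has spread into a blur disc of diameter f²/(N·(s−f)). This sketch uses simple thin-lens geometry, which is my simplification: a real phone lens is a multi-element design, so treat the result as an order-of-magnitude check.

```python
def infinity_blur_mm(f_mm: float, n: float, focus_m: float) -> float:
    """Blur-disc diameter on the sensor (mm) for a point at infinity.

    Focused at s, the sensor is at v = f s / (s - f); infinity focuses at f.
    A cone with aperture diameter f/N spreads to f^2 / (N (s - f)) at the sensor.
    """
    s = focus_m * 1000.0                       # work in millimetres
    return f_mm ** 2 / (n * (s - f_mm))


if __name__ == "__main__":
    blur = infinity_blur_mm(5.7, 1.5, 0.44)    # focused at ~44 cm
    print(f"blur disc ~ {blur:.3f} mm, ~{blur / 0.007:.1f}x the 0.007 mm CoC")
```

At a focus distance of 0.44 m this comes out at about 0.05 mm, roughly seven times the 0.007 mm circle of confusion, so a distant object would be visibly soft, assuming the EXIF focus estimate is right.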

Let's look at the higher-resolution photo of the plants in the foreground and see what it looks like.

[Attachment: fernando.webp]

To be honest, the resolution is still way too low for me to say where the best focus is.

What about the highest-resolution version we have of the orb/butterfly?

Now we're getting somewhere. Setting resolution aside, the orb/butterfly is in better focus than the background. Notice the detail on the butterfly's wings: the scales are clearly visible, with a well-defined border between them. The forest, by contrast, shows little detail.

This is more consistent with the object being close and small.

I invite a counter-argument. But at least it would be a fact-based argument rather than an argument by assertion.

If we had the full-resolution photo, this issue would be more clear-cut.